1. Equation solving tools

We distinguish between root finding or solving algebraic equations and solving differential equations.

It is also useful to distinguish between approximate solution using numeric methods and exact solution.

1.1. Exact solution using sympy

We can solve systems of equations exactly using sympy’s solve function. This is usually done using what is known as the residual form. The residual is simply the difference between the LHS and RHS of an equation, or put another way, we rewrite our equations to be equal to zero:

\begin{align} x + y &= z \\ \therefore x + y - z &= 0 \end{align}

[1]:

import sympy

sympy.init_printing()

%matplotlib inline

[2]:

x, y, z = sympy.symbols('x, y, z')

[3]:

sympy.solve(x + y - z, z)

[3]:

We can solve systems of equations using solve as well, by passing a list of equations

[4]:

equations = [x + y - z,

2*x + y + z + 2,

x - y - z + 2]

unknowns = [x, y, z]

[5]:

solution = sympy.solve(equations, unknowns)

solution

[5]:

[6]:

%%timeit

sympy.solve(equations, unknowns)

13.5 ms ± 4.97 ms per loop (mean ± std. dev. of 7 runs, 100 loops each)

Notice that the result is a dictionary. We can get the individual answers by indexing (using [])

[7]:

solution[x]

[7]:

We often need the numeric value rather than the exact value. We can convert to a floating point number using .n()

[8]:

solution[x].n()

[8]:

1.2. Special case: linear systems

For linear systems like the one above, we can solve very efficiently using matrix algebra. The system of equations can be rewritten in matrix form:

[9]:

equations

[9]:

[10]:

A = sympy.Matrix([[1, 1, -1],

[2, 1, 1],

[1, -1, -1]])

b = sympy.Matrix([[0, -2, -2]]).T

[11]:

A.solve(b)

[11]:

[12]:

%%time

A.solve(b)

CPU times: user 2.49 ms, sys: 882 µs, total: 3.37 ms

Wall time: 10 ms

[12]:

We can repeat the solution using numpy. This is considerably faster than using sympy for large matrices.

[13]:

import numpy

[14]:

A = numpy.matrix([[1, 1, -1],

[2, 1, 1],

[1, -1, -1]])

b = numpy.matrix([[0, -2, -2]]).T

[15]:

numpy.linalg.solve(A, b)

[15]:

matrix([[-1.33333333],

[ 1. ],

[-0.33333333]])

[16]:

%%time

numpy.linalg.solve(A, b)

CPU times: user 113 µs, sys: 24 µs, total: 137 µs

Wall time: 129 µs

[16]:

matrix([[-1.33333333],

[ 1. ],

[-0.33333333]])

The numpy version is much faster, even for these small matrices. Let’s try that again for a bigger matrix:

[17]:

N = 30

bigA = numpy.random.random((N, N))

[18]:

bigb = numpy.random.random((N,))

[19]:

%%timeit

numpy.linalg.solve(bigA, bigb)

40.6 µs ± 1.3 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

[20]:

bigsymbolicA = sympy.Matrix(bigA)

[21]:

bigsimbolicb = sympy.Matrix(bigb)

[22]:

%%timeit

bigsymbolicA.solve(bigsimbolicb)

1.18 s ± 69 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

Wow! That takes about a million times longer.

1.3. Nonlinear equations

In some cases, sympy can solve nonlinear equations exactly:

[23]:

x, y = sympy.symbols('x, y')

[24]:

sympy.solve([x + sympy.log(y), y**2 - 1], [x, y])

[24]:

We can also specify the kinds of solutions we are interested in by increasing our specifications on the symbols. The answer above contained a complex answer. In engineering we often want only real solutions

[25]:

x, y = sympy.symbols('x, y', real=True)

[26]:

sympy.solve([x + sympy.log(y), y**2 - 1], [x, y])

[26]:

But sometimes nonlinear equations don’t admit a closed-form solution:

[27]:

unsolvable = x + sympy.cos(x) + sympy.log(x)

sympy.solve(unsolvable, x)

---------------------------------------------------------------------------

NotImplementedError Traceback (most recent call last)

<ipython-input-27-8845e2a074b6> in <module>()

1 unsolvable = x + sympy.cos(x) + sympy.log(x)

----> 2 sympy.solve(unsolvable, x)

~/anaconda3/lib/python3.6/site-packages/sympy/solvers/solvers.py in solve(f, *symbols, **flags)

1063 ###########################################################################

1064 if bare_f:

-> 1065 solution = _solve(f[0], *symbols, **flags)

1066 else:

1067 solution = _solve_system(f, symbols, **flags)

~/anaconda3/lib/python3.6/site-packages/sympy/solvers/solvers.py in _solve(f, *symbols, **flags)

1632

1633 if result is False:

-> 1634 raise NotImplementedError('\n'.join([msg, not_impl_msg % f]))

1635

1636 if flags.get('simplify', True):

NotImplementedError: multiple generators [x, cos(x), log(x)]

No algorithms are implemented to solve equation x + log(x) + cos(x)

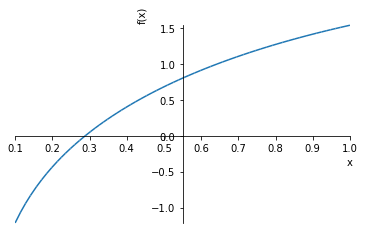

1.3.1. Numeric root finding

In such cases we need to use approximate (numeric) solutions. When finding roots numerically it is a good idea to produce a plot if possible:

[28]:

sympy.plot(unsolvable)

[28]:

<sympy.plotting.plot.Plot at 0x11ee4c198>

We see the root is between 0 and 1 and there appears to be an asymptote at 0. Let’s zoom in a bit

[29]:

sympy.plot(unsolvable, (x, 0.1, 1))

[29]:

<sympy.plotting.plot.Plot at 0x11f2dd908>

Sympy.nsolve will attempt to find a root starting near a starting point. 0.3 looks like a good first guess.

[30]:

sympy.nsolve(unsolvable, x, 0.3)

[30]:

If we’re going to be using numeric methods anyway, we can also use the routines in scipy.optimize to solve equations:

[31]:

import scipy.optimize

The function sympy.lambdify can be used to build a function which evaluates sympy expressions numerically:

[32]:

plus_two = lambda x: x+2

[33]:

plus_two(2)

[33]:

[34]:

def plus_two(x):

return x + 2

[35]:

unsolvable_numeric = sympy.lambdify(x, unsolvable)

[36]:

unsolvable_numeric(0.3)

[36]:

This is the kind of thing we can pass to scipy.optimize.fsolve

[37]:

scipy.optimize.fsolve(unsolvable_numeric, 0.1)

[37]:

array([0.28751828])

fsolve works for multiple equations as well, just return a list:

[38]:

def multiple_equations(unknowns):

x, y = unknowns

return [x + y - 1,

x - y]

[39]:

multiple_equations([1, 2])

[39]:

[40]:

first_guess = [1, 1]

scipy.optimize.fsolve(multiple_equations, first_guess)

[40]:

array([0.5, 0.5])

1.3.2. Downsides of numerical solution

Remember the downsides of numerical solution:

Approximate rather than exact

Requires an initial guess

Slower to solve the equation every time rather than solving it once and then substituting values.

Typically only finds one solution, even if there are many.

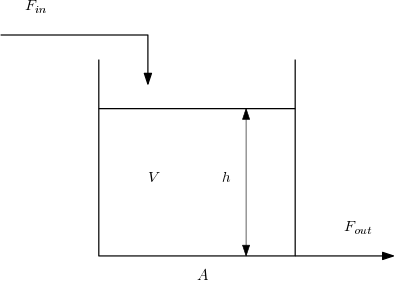

1.4. Differential equations

Now for differential equations.

We’ll solve the “classic” tank problem:

\begin{align} F_{out} &= kh\\ \frac{\mathrm{d}h}{\mathrm{d}t} &= \frac{1}{A}\left(F_{in} - F_{out}\right)\\ \end{align}

1.4.1. Analytic solution

Sympy can solve some differential equations analytically:

[41]:

h = sympy.Function('h') # This is how to specify an unknown function in sympy

t = sympy.Symbol('t', positive=True)

[42]:

Fin = 2

K = 1

A = 1

[43]:

Fout = K*h(t)

Fout

[43]:

We use .diff() to take the derivative of a function.

[44]:

de = h(t).diff(t) - 1/A*(Fin - Fout)

de

[44]:

Here we calculate the general solution. Notice this equation just satisfies the original differential equation when we plug it in, we don’t have specific values at points in time until we specify boundary conditions.

[45]:

solution = sympy.dsolve(de)

solution

[45]:

We need a name for the constant of integration which Sympy created. Expressions are arranged as trees with the arguments as elements. We can navigate this tree to get the C1 element:

[46]:

C1 = solution.rhs.args[1].args[0]

We can find the value of the constant by using an initial value:

[47]:

h0 = 1

[48]:

constants = sympy.solve(solution.rhs.subs({t: 0}) - h0, C1)

constants

[48]:

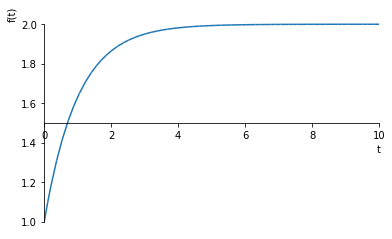

Let’s see what that looks like

[49]:

import matplotlib.pyplot as plt

%matplotlib inline

[50]:

sympy.plot(solution.rhs.subs({C1: constants[0]}), (t, 0, 10))

[50]:

<sympy.plotting.plot.Plot at 0x10209a5d68>

1.4.2. Numeric solution

When the boundary conditions of differential equations are specified at \(t=0\), this is known as an Initial Value Problem or IVP. We can solve such problems numerically using scipy.integrate.solve_ivp.

[51]:

import scipy.integrate

[52]:

Fin = 2

[53]:

def dhdt(t, h):

"""Function returning derivative of h - note it takes t and h as arguments"""

Fout = K*h

return 1/A*(Fin - Fout)

solve_ivp will automatically determine the time steps to use, integrating between the two points in tspan:

[54]:

tspan = (0, 10)

[55]:

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0])

We’ll need a smooth set of time points to evaluate the analytic solution

[56]:

tsmooth = numpy.linspace(0, 10, 1000)

hanalytic = 2 - numpy.exp(-tsmooth)

[57]:

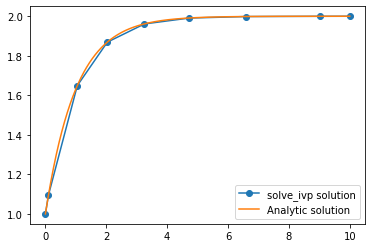

plt.plot(sol.t, sol.y.T, 'o-', label='solve_ivp solution')

plt.plot(tsmooth, hanalytic, label='Analytic solution')

plt.legend()

[57]:

<matplotlib.legend.Legend at 0x1520b9e6a0>

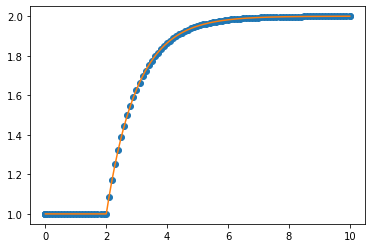

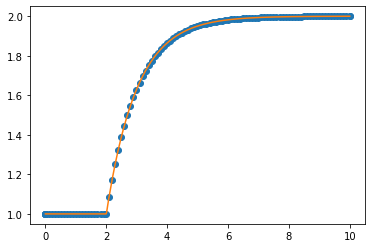

Notice that solve_ivp is taking really large steps but is still getting a really accurate solution. Of course, because we are taking such big steps to solve the differential equation, we now have a problem of interpolating between those points. The linear interpolation is clearly not very good, so solve_ivp supplies an extra argument which allows us to specify points we want the solution at. Note that this does not change the step size. The same steps are used internally and are then

interpolated using a smooth function which is known to approximate the solution to the differential equation well.

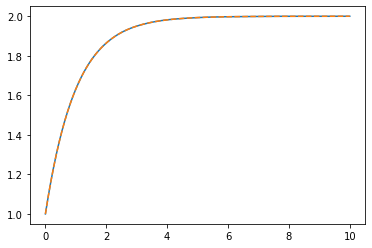

[58]:

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0], t_eval=tsmooth)

[59]:

plt.plot(tsmooth, sol.y.T)

plt.plot(tsmooth, hanalytic, '--')

[59]:

[<matplotlib.lines.Line2D at 0x1520beb908>]

We can see that this interpolation is very close to the correct solution.

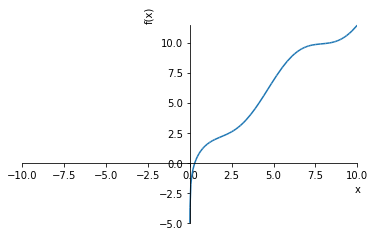

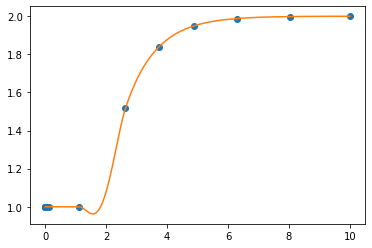

There is a problem with taking big steps if inputs change discontinuously. This example illustrates the problem:

[60]:

import scipy.integrate

[61]:

def Fin(t):

""" A step which starts at t=2 """

if t < 2:

return 1

else:

return 2

[62]:

def dhdt(t, h):

Fout = K*h

return 1/A*(Fin(t) - Fout)

[63]:

tspan = (0, 10)

[64]:

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0])

smoothsol = scipy.integrate.solve_ivp(dhdt, tspan, [h0], t_eval=tsmooth)

[65]:

plt.plot(sol.t, sol.y.T, 'o')

plt.plot(smoothsol.t, smoothsol.y.T)

[65]:

[<matplotlib.lines.Line2D at 0x1520cab358>]

That downward bump in the level is a numerical anomaly due to the places where the samples are taking during integration. To make sure that we don’t miss the moment when the step occurs, we can limit the step size that solve_ivp uses.

[66]:

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0], max_step=0.1)

smoothsol = scipy.integrate.solve_ivp(dhdt, tspan, [h0], t_eval=tsmooth, max_step=0.1)

[67]:

plt.plot(sol.t, sol.y.T, 'o')

plt.plot(smoothsol.t, smoothsol.y.T)

[67]:

[<matplotlib.lines.Line2D at 0x1520d8ee48>]

This works, but we pay for this with computer time:

[68]:

%%timeit

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0])

3.25 ms ± 207 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

[69]:

%%timeit

sol = scipy.integrate.solve_ivp(dhdt, tspan, [h0], max_step=0.1)

19.5 ms ± 266 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

1.4.2.1. A note about odeint

The default solver for ODEs in scipy used to be odeint, but this is now officially deprecated. You may still encounter it in older codes, so take note of the differences:

[70]:

def odeintdhdt(h, t):

"""Odeint expects a function with the arguments reversed from solve_ivp"""

return dhdt(t, h)

The order in which the initial values and the input times are specified is different. Also, unlike solve_ivp, you always give odeint the times you want the results at.

[71]:

odeinth = scipy.integrate.odeint(odeintdhdt, h0, tsmooth)

[72]:

plt.plot(sol.t, sol.y.T, 'o')

plt.plot(tsmooth, odeinth)

[72]:

[<matplotlib.lines.Line2D at 0x11eca3518>]