2. The problem with simple math on computers

Have you ever considered how computers store numbers? Can you explain why this happens?

[1]:

a = 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1

a

[1]:

0.9999999999999999

[2]:

a == 1.

[2]:

False

[3]:

b = 0.125 + 0.125 + 0.125 + 0.125 + 0.125 + 0.125 + 0.125 + 0.125

b

[3]:

1.0

[4]:

b == 1.

[4]:

True

2.1. Computers use base 2 instead of base 10

You’ve heard that computers are all about ones and zeros, right? What does this mean?

When I write a “normal” number like 123, what I mean is \(1\times10^2 + 2\times 10^1 + 3 \times 10^0\). This idea is called base 10 or decimal representation. Computers use binary or base 2 representation. This means you would write \(101_2 = 5_10\), with the subscript representing the base. The math would work out as \(1\times 2^2 + 0\times2^1 + 1\times 2^0\), just like in the 123 example.

This representation is exact for integers, but we run into problems when we use fractions. For instance, we all know that \(1/3\) doesn’t have a finite representation in decimals, since \(1/3 = 0.\overline{33} = 3\times 10^{-1} + 3\times10^{-2}+\cdots\) forever. Notice that in base 3, 1/3 works out fine as \(0.1_3\) since \(1/3 = 1\times3^{-1}\) exactly. So here’s the problem with writing \(0.1\) in binary:

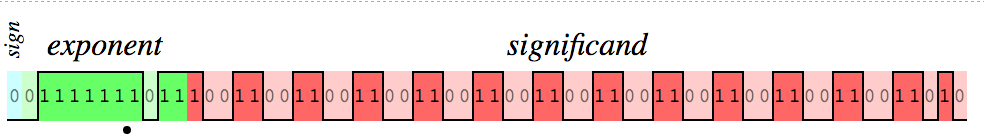

This visualisation shows how IEEE floats are represented and indicates the repeating structure of the representation of 0.1.

We can see that the binary representation is not finite, so the computer treats 1/10 more like a number like 1/7 (which we all know has an infinite decimal representation).

There is a great deal more information on this issue at these pages:

The Floating-Point Guide - this is an easy-to-read page with lots of examples

What Every Computer Scientist Should Know About Floating-Point Arithmetic - a more in-depth analysis of floating-point with lots of math

2.2. Solutions

2.2.1. Built-in to Python

The solution that Python supplies in the standard library is the decimal module:

[5]:

import decimal

[6]:

a = decimal.Decimal('0.1')

[7]:

a = 1/decimal.Decimal(10)

[8]:

sum(a for i in range(10))

[8]:

Decimal('1.0')

[9]:

sum(0.1 for i in range(10))

[9]:

0.9999999999999999

2.2.2. Sympy

Sympy also has a solution in the form of a Rational object

[10]:

import sympy

sympy.init_printing()

[11]:

b = sympy.Rational('0.1')

[12]:

b

[12]:

We can also use sympy.nsimplify.

[13]:

b = 1

c = 10

[14]:

a = b/c

[15]:

type(a)

[15]:

float

[16]:

sympy.nsimplify(a)

[16]:

2.3. Why isn’t math always done in base 10?

The extra precision comes at a cost.

[17]:

%%timeit

a = 0.1

s = 0

for i in range(100000):

s += a

5 ms ± 321 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

[18]:

%%timeit

a = decimal.Decimal('0.1')

s = decimal.Decimal(0)

for i in range(100000):

s += a

10.8 ms ± 246 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

[19]:

%%timeit

a = sympy.Rational(1, 10)

s = 0

for i in range(100000):

s += a

2.03 s ± 166 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

Using sympy rationals is about a thousand times slower than using built-in Python floats.

2.4. Forcing rounding of exact representations

If an equation results in an “Exact” answer which isn’t “useful”, like \(\sqrt{3}x\), we can approximate that using sympy.N

[20]:

x = sympy.Symbol('x')

[21]:

expr = sympy.sqrt(3)*x

expr

[21]:

[22]:

sympy.simplify(expr**2)

[22]:

[23]:

sympy.simplify(sympy.N(expr, 3)**2)

[23]:

[ ]: